I was very excited about this session at MySQL Connect by Daniel Austin of PayPal. I have been talking about this session for a few weeks, on 2 different podcasts, and 2 different blog posts. But I was a bit nervous, because the description was fantastic but the talk itself could have fallen apart.

After seeing the keynote, I knew the talk would be fantastic. I was not disappointed.

Big myths about Big Data:

PayPal problem How do we manage reliable distribution of data across geographical distances?

The first thing people think of when they think of big data is NoSQL. NoSQL provides a solution that relaxes many of the common RDBMS constraints too slow, requires complex data management like Sarbanes-Oxley (SOX), costly to maintain, slow to change and adapt, intolerant of CAP models.

NoSQL are non-relational models usually (not always) like key-value stores. They may be batched or streaming, and they are not necessarily distributed geographically (but they are at PayPal).

Big data myth #1 Big data = nosql

Big data refers to a common set of problems large volumes, high rates of change data/data models/presentation and output.

Often big data isnt just big, its that it needs to be FAST too. Things like near real-time analytics, or mapping complex structures.

3 kinds of big data systems:

1) Columnar key-value systems Hadoop, Hbase, Cassandra, PNUTs

2) Document-based MongoDB, TerraCotta

3) Graph-based Voldemort, FlockDB. These are the more interesting ones, the other 2 are a bit more brute force according to Daniel.

The CAP theorem (Daniel Abadi added latency)

The nice sound byte is:

You cant really trade availability for consistency, because if its not available you have no idea if its consistent or now.

Do you need a big data system?

Whats your problem one of 2:

1) I have too much data and its coming in too fast to handle with any RDBMS

(e.g. sensor data)

2) I have a lot of data distributed geographically and need to be able to read and write from anywhere in near real-time. (PayPals problem)

If you have one of those 2 problems, you may have a problem that can be solved with NoSQL,

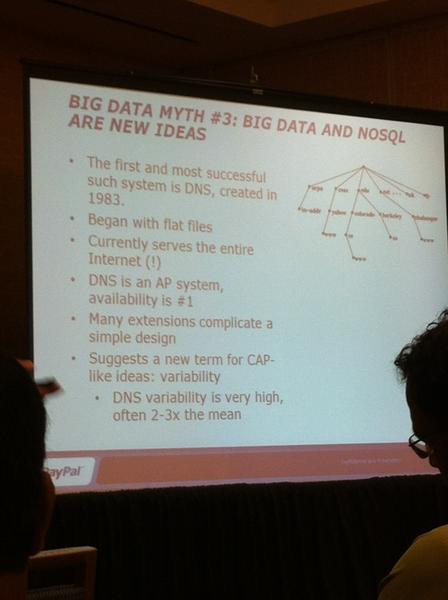

Myth: Big Data and NoSQL are not new ideas. DNS was the first and most successful such system, created in 1983 [Sheeri says: memcached is NoSQL – key/value store]:

YESQL: A counter example. The mission develop a globally distributed db for user-related data. Here are the constraints:

– Must not fail

– Must not lose data (its your MONEY!!)

– Must support transactions

– Must support (some) SQL

– Must WriteRead 32-bit integer globally in 1000ms (1sec)

– Max data volume: 100 TB

– Must scale linearly with costs

Speed constraints:

Max lightspeed distance on earths surface 67ms. Target data available worldwide in 1000ms

They chose to use MySQL Cluster because:

– True HA by design

– with fast recovery

– Supports (some) transactions

– Relational model

– In-memory architecture, which translates to high performance

– Disk storage available for non-indexed data

– APIs to make things easier. Cant just use ODBC or JDBC for this, need high performance APIs.

There are cons to MySQL cluster:

– some semantic limitations on fields (already lifted, but werent when PayPal was looking for a solution)

– Size constraints (about 2 Tb) back when Cluster couldnt do 64-bit, so this is resolved now.

– Hardware constraints

– Higher cost/byte

– Requires reasonable data partitioning

– Higher complexity

They use circular replication/failover with cluster. They have 4 nodes, talking to each other, keeping themselves in sync. If node C fails, node B can talk to node D thats what this pic shows:

When C comes back up you have to move it back to the *end* of the replication flow chain so it can catch up.

Availability defined availability of the entire system is:

Built this in Amazon Web Services (AWS)

– Why AWS? Cheap and easy infrastructure-in-a-box or so they thought!

Services used:

– EC2, CentOS 5.3, small instances for the management (mgm) and query nodes, XL instances for data 44 with 24G each, each tile is 96G RAM)

– Elastic IPs/ELB

– EBS Volumes, used to have to use dd to move images from one AWS data center to another

– S3

– Cloudwatch for monitoring

Architectural tiles developed in a paper with Donald Knuth. Picture on this slide:

– Never separate NDB and SQL

– 2 NDB (aka data) nodes for every SQL node for every 1 management nodes

– For scaling, bring up a new tile, not just a new machine they use a RightScale template

– Failover first to the nearest availability zone, then to the nearest data center

– At least one replica for every availability zone

– No shared nodes

– Some management nodes are redundant, thats OK

– AWS is network-bound at 250 Mb per second!

– Need specific ACL across availability zone boundaries

– AZs not uniform,

– No GSLB global server load balancing

– Dynamic IPs

– ELB sticky sessions are unreliable this is fixed now in AWS

– con: have to upgrade the whole tile at once

Other tech considered:

– Paxos elegant-but-complex consensus-based messaging protocol. Used in Google Megastore, Bing metadata

– Java Query caching queries as serialized objects but not yet working

– Multiple ring architectures, but those are even more complicated.

System r/w performance:

– 23 256 byte char fields

– reads/writes/query speed vs. volume

– data replication speeds

Results:

– global replication in under 350 ms

– 256 bytes read in under 1000 ms worldwide.

Data models and query optimization

– network latency (obvious issue)

– data model requires all segments present in each geo-region

– parameterized (linked) joins adaptive query localization (SIP) technique from Clustra see Frazer Clements blog for details)

they went around the international date line the wrong way at first.commit ordering matters!

Order in which you do writes vs. reads is important! Writes dont always happen at the same time you start them at.

Be careful:

– with eventual consistency-related concepts

– ACID/CAP are not really as well-defined considering how often we invoke them

– MySQL Cluster is good, b/c it has real HA, real SQL. Notable limits around fields, datatypes, but successfully competes with NoSQL for many use cases, often is better

– NoSQL has relatively low maturity, MySQL Cluster is much more mature.

– Dont be a victim of Technological Fashion!

Fugure directions:

– Alternatives using Pacemaker, Heartbeat (using InnoDB, Yves Trudeau at Percona)

– Implement the memcached plugin add simple connection-based persistence to preserve connections during failover

– better monitoring

– -better data node distribution

Summing up on YESQL 0.85:

– it works, better than expected!

– very fast

– very reliable

– reduced complexity since 0.7

– AWS poses cahallenges that private data centers might not have

Only use big data solutions when you have a REAL big data problems. Not all big data solutions are createdeuqal. What tradeoffs are important consstency, fault tolerance, etc.

– You can achieve high performance and aviailability w/out giving up on relational models

Maynard

Keynes on NoSQL Databases

In the long run, we are all dead eventually consistent).

Comments are closed.